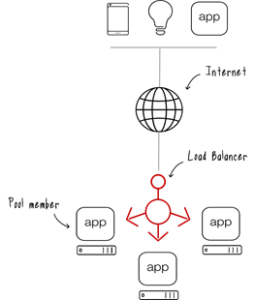

First, let's see what load balancing is.

It mainly means distributing the work of one computer across two or more similar computers. This improves the reliability and responsiveness of the system.

Load balancers generally fall into two categories.

Layer 4: Acts on data found in network and transport layer protocols (IP, TCP, and UDP)

Layer 7: Distributes requests based on data found in application layer protocols such as HTTP
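To make the difference concrete, here is a minimal sketch of the two kinds of decision. The pool addresses, path prefixes and function names are all made up for illustration, and the hashing scheme is just one possible way a Layer 4 balancer could pick a backend; real products differ.

```python
import hashlib

# Hypothetical backend pools, for illustration only.
L4_POOL = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
L7_POOLS = {
    "/api": ["10.0.1.1", "10.0.1.2"],
    "/static": ["10.0.2.1"],
}
DEFAULT_POOL = ["10.0.3.1"]


def pick_layer4(src_ip, src_port):
    """Choose a backend using transport-level data only (source address and port)."""
    key = f"{src_ip}:{src_port}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return L4_POOL[digest % len(L4_POOL)]


def pick_layer7(http_path):
    """Choose a backend pool by inspecting application-level data (the HTTP path)."""
    for prefix, pool in L7_POOLS.items():
        if http_path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

The Layer 4 function never looks inside the request, while the Layer 7 function needs the parsed HTTP path before it can decide.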

Load balancers (LBs) also come in two types:

Hardware LB: F5 BIG-IP, Cisco, Citrix

Software LB: NGINX, HAProxy, LoadMaster

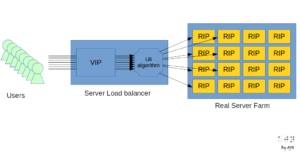

These load balancers use different algorithms to distribute the load across the application pool. Some of the industry-standard load balancing algorithms are:

- Round robin: This method continuously rotates a list of services that are attached to it. When the virtual server receives a request, it assigns the connection to the first service in the list, and then moves that service to the bottom of the list.

- Least connections: When a virtual server is configured to use the least connection method, it selects the service with the fewest active connections. This is the default method on some load balancers.

- Least response time: This method selects the service with the fewest active connections and the lowest average response time.
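The three algorithms above can be sketched in a few lines. This is a simplified illustration, not how any particular product implements them: the `Service` class and its fields are hypothetical, and treating response time as a tie-breaker after connection count is an assumption about how the two criteria combine.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Service:
    # Hypothetical view of a backend as seen by the load balancer.
    name: str
    active_connections: int = 0
    avg_response_ms: float = 0.0


def round_robin(services):
    """Return an iterator that yields the services in rotation, forever."""
    return cycle(services)


def least_connections(services):
    """Pick the service with the fewest active connections."""
    return min(services, key=lambda s: s.active_connections)


def least_response_time(services):
    """Pick by fewest active connections, then lowest average response time.

    Assumption: response time is used only to break ties on connection count.
    """
    return min(services, key=lambda s: (s.active_connections, s.avg_response_ms))
```

For example, given services `a` (3 connections, 120 ms), `b` (1 connection, 80 ms) and `c` (1 connection, 40 ms), round robin hands out `a`, `b`, `c`, `a`, and so on, least connections picks `b` (the first of the two services with one connection), and least response time picks `c`.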

That covers the very basic theory of load balancing. Now let's see how server-side load balancing works in the real world.

Server Side Load Balancing

A server-side load balancer sits between the clients and the server farm, accepting incoming network and application traffic and distributing it across multiple backend servers using various methods. Typically, the load balancer checks the health of the servers in the pool and uses one of the algorithms discussed earlier to distribute the load. This was the most common mechanism for managing application load in the past. However, client-side load balancing is now on the rise. Let's dig deeper into it.
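As a rough sketch of that idea, the toy class below combines a health check with round robin. The class name, the `is_healthy` callback and the backend names are all hypothetical; a real load balancer would probe its backends over the network rather than call a Python function.

```python
class ServerSideLoadBalancer:
    """Toy load balancer: round robin over backends that pass a health check."""

    def __init__(self, backends, is_healthy):
        self.backends = list(backends)
        self.is_healthy = is_healthy  # callable: backend -> bool (assumed interface)
        self._next = 0

    def route(self):
        """Return the next healthy backend, or raise if none is available."""
        # Try each backend at most once per call, starting where we left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if self.is_healthy(backend):
                return backend
        raise RuntimeError("no healthy backend available")
```

With backends `app-1`, `app-2` and `app-3`, and a health check that reports `app-2` as down, four consecutive calls to `route()` return `app-1`, `app-3`, `app-1`, `app-3`. The clients never see this logic; they only talk to the load balancer.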

Client Side Load Balancing

As we discussed, in server-side load balancing a middle component is responsible for distributing client requests to the servers. With client-side load balancing, that middle component no longer makes the load distribution decision. The client itself decides which server to forward the request to. How it works is very simple: the client holds a list of server IPs that it can send requests to, selects an IP from the list at random, and forwards the request to that server.
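A minimal sketch of that random selection could look like this. The class, the `transport` callback and the IP addresses are hypothetical and only stand in for whatever HTTP or RPC client the application really uses.

```python
import random


class ClientSideBalancer:
    """Toy client-side balancer: the client picks a server IP at random."""

    def __init__(self, server_ips, rng=None):
        if not server_ips:
            raise ValueError("server_ips must not be empty")
        self.server_ips = list(server_ips)
        # An injectable random generator keeps the behaviour testable.
        self._rng = rng or random.Random()

    def choose(self):
        """Select one server IP from the list at random."""
        return self._rng.choice(self.server_ips)

    def send(self, request, transport):
        """Forward the request to a randomly chosen server via transport(ip, request)."""
        return transport(self.choose(), request)
```

Note that there is no component between the client and the servers here: the list and the decision both live inside the client.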

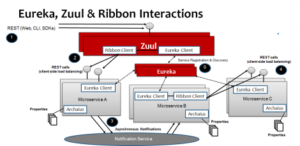

Client-side load balancing plays a major role in microservice architecture. Netflix components like Ribbon and Eureka support client-side load balancing and offer features similar to server-side load balancing, such as fault tolerance, caching and batching.

Let's see how Eureka and Ribbon work together to achieve client-side load balancing in a microservice architecture.

As per the above diagram, let's assume Microservice B wants to communicate with Microservice C. Microservice B is the client, so it uses the Eureka client to find out which nodes (the server list) are available for Microservice C. Then, using the Ribbon client in Microservice B, it calls Microservice C using the default round robin algorithm. The method Microservice B uses to call Microservice C is known as client-side load balancing: the client itself decides which server to call, not a middle component as in server-side load balancing.
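The flow can be imitated with a small simulation. To be clear, this is not the Eureka or Ribbon API (both are Java libraries); `ServiceRegistry` and `RoundRobinClient` are hypothetical stand-ins that only mirror the roles described above, and the service name and addresses are invented.

```python
from itertools import count


class ServiceRegistry:
    """Stand-in for a discovery server such as Eureka (not its real API)."""

    def __init__(self):
        self._instances = {}

    def register(self, service_name, address):
        """Record that an instance of service_name is reachable at address."""
        self._instances.setdefault(service_name, []).append(address)

    def get_instances(self, service_name):
        """Return a copy of the server list for service_name."""
        return list(self._instances.get(service_name, []))


class RoundRobinClient:
    """Stand-in for a Ribbon-style client living inside the calling service."""

    def __init__(self, registry, target_service):
        self.registry = registry
        self.target_service = target_service
        self._counter = count()

    def next_instance(self):
        """Fetch the current server list and pick the next entry in rotation."""
        instances = self.registry.get_instances(self.target_service)
        if not instances:
            raise LookupError(f"no instances registered for {self.target_service}")
        return instances[next(self._counter) % len(instances)]
```

If two instances of `microservice-c` are registered, say at `10.0.0.11:8080` and `10.0.0.12:8080`, a `RoundRobinClient` created inside Microservice B returns the first address, then the second, then the first again. The registry only answers "who is available"; the choice of instance is made by the client.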

All the links below are provided as references to give more insight into the topics we have covered, especially how you can use Netflix OSS to achieve client-side load balancing in microservice solutions.

References

- https://www.citrix.com/glossary/load-balancing.html

- https://www.digitalocean.com/community/tutorials/what-is-load-balancing

- https://dzone.com/articles/spring-cloud-netflix-load-balancer-with-ribbonfeig

- https://www.nirmata.com/2014/08/13/getting-started-with-microservices-using-netflix-oss-docker/

- https://cciethebeginning.wordpress.com/category/load-balancing/